HYPER-FIXXXATION

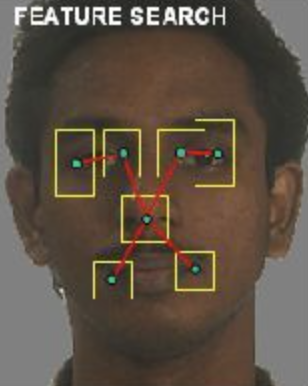

Deep fakes are synthetic images, videos or audio recordings that can be virtually indistinguishable from the real thing, and they are easily used for manipulation or misinformation. In 2022, the Cyberspace Administration of China (CAC) issued new rules for content providers that alter facial and voice data. The rules followed the popularity of a face-swapping app called ZAO in the country. ZAO collects a user’s facial information and also obtains their personal contact information. Fears that users’ personal and financial data could be exposed in data breaches led regulators to impose rules meant to prevent such breaches and a potential industry collapse. Despite the warnings from this Eastern superpower, the United States has pressed on with the technology.

Apple Vision Pro, a new augmented reality headset, has spurred debate over whether the device is dystopian or revolutionary. Billed as “unveiling the future,” the headset includes an artificial intelligence (A.I.) feature called Personas. It builds a live, computer-generated likeness of the wearer’s face and streams it to the people on the other end of a FaceTime call. Rather than looking into the eyes of a real-life coworker, you may now be talking to a synthetic stand-in.

Christian Guyton, a U.K.-based computing editor and contributing writer for TechRadar, believes that deep fake FaceTime calls corrupt our natural ability to connect and build relationships with other human beings.

“Video calls ushered in a new era of long-distance intimacy in our society, taking us a step beyond phone calls and giving us the ability to look into the eyes of our loved ones,” says Guyton.

“If you FaceTime me with Apple's new Vision Pro headset, I'll end our friendship.”

People have the right to use their imagination to create their own fantasies. But the latest advances in A.I.-generated material take away the right of the people depicted to decide whether they will star in those fantasies once they are made real. Deep fakes and A.I.-generated images have been criticized for interfering with political elections and for becoming superspreaders of misinformation. Deep fake technology makes fabricated videos appear authentic, lending credibility to lies. The broader social concern, however, is that there is no guarantee of consent. What happens when things become personal?

Nonconsensual pornography refers to the distribution of sexual or pornographic images of individuals without their consent. This includes images taken without consent. It also includes images taken with consent but later distributed without the consent of the people in them. Such images are sometimes referred to as revenge porn. Charlotte Laws, an anti-revenge porn activist, gained international attention for speaking out against Hunter Moore, known as “the most hated man on the internet.” She also spoke out against his website IsAnyoneUp?, a site for user-submitted pornography launched in 2010. The site quickly became an incubator for abuse. Scorned users deliberately posted nude photos and videos of former lovers, and nonconsensual explicit recordings were uploaded for thrills and a sense of community. A real down-at-the-heel crowd.

In 2015, Reddit, the social media platform and online bulletin board, banned the posting of sexually explicit content without the consent of the people depicted. In March 2022, Congress passed a federal law on the subject as part of the Violence Against Women Act Reauthorization Act of 2022. It allows an individual to file a federal lawsuit against anyone who disclosed intimate images of them without their consent. Earlier, the Communications Decency Act of 1996 sought to regulate pornography on the internet. Its Section 230 protects websites and service providers from liability for content posted by users that they did not help create. Currently, 48 of the 50 U.S. states have nonconsensual pornography laws; the exceptions are Massachusetts and South Carolina.

Still, the solution for an abuser at the gate of a boundary is to forge it. Deep fake technology readily enables this behavior. Deep fakes give a user the opportunity to create an alternative reality in which they can simulate the words, expressions and actions of another person. If this kind of control were exercised in real life between real people, the power dynamic would be recognized as abuse. For now, however, an A.I.-generated image is simply the output of neural networks responding to a user’s prompts. The prompts can be as open-ended or as specific as the user wants, and the result is ultimately in service of the user’s desires (Example: “create an image of Uncle Sam if he were a drag queen contestant on RuPaul’s Drag Race for a Tina Turner tribute episode”). Although the image is based on a real person, that person’s autonomy over their body and mind is overridden once their likeness is retrofitted. From who you are to what you are.

"One of the most disturbing trends I see on forums of people making this content is that they think it's a joke or they don't think it's serious because the results aren't hyperrealistic, not understanding that for victims, this is still really, really painful and traumatic," said Henry Ajder, an expert on generative A.I.

Concerns about this unregulated technology as a tool of dehumanization resurfaced in the mainstream when sexually explicit A.I.-generated images of Taylor Swift circulated on X, formerly Twitter. One post of the images attracted more than 45 million views, 24,000 reposts, and hundreds of thousands of likes and bookmarks. Only then was the verified user who shared it suspended for violating platform policy. The post was live on the platform for about 17 hours before it was removed. It is unknown whether screenshots or altered copies of these images are being flagged and removed. “Taylor Swift AI” became a trending topic on social media, promoting the images to wider audiences. Deeptrace, a company specializing in A.I., estimated in 2019 that 96% of deep fake videos online were pornographic videos made without the consent of the people featured in them.

This violation speaks to the very real challenge of preventing deep fake porn and A.I.-generated images of real people. It should not come as much of a shock that this particular trend targets women and children. This behavior also puts into perspective the harmful repercussions of A.I. photorealism. The responsibility for stopping fake images, news and other media from spreading often falls through the cracks on social platforms, where users act as both curators and responders. That arrangement is difficult to regulate under the best of circumstances, and smartphone screen recording makes it even harder to police.

The more authenticity is attacked, the fewer shared experiences we will have with one another, and the harder it will become to define what is “real.” Our social identities, the groups we see ourselves as belonging to, make us easy to fool with biased or even fake content. It can also be extremely harmful to have your essence reimagined and manifested as a version of you that has never existed under your authority. Today, big tech has made the first incision into the tether between mind and body.